Message delivery and deduplication strategies

While working with distributed systems, different communication patterns are most likely the first ones you’ll learn. Most programmers are perfectly aware of existing options:

- at-most-once

- at-least-once

- and exactly-once delivery.

They are well documented in many different publications, so just to recap, with the at-most-once, a message can be lost. With the at-least-once, all messages will be delivered one or more times. And exactly-once delivery does not exist. Ok, that's not entirely true. Exactly once is not possible from a message delivery perspective, but when using techniques like message deduplication, we can achieve effectively-once, which is a far better name. The outcome or effect could be done once, that's doable.

at-most-once delivery

The at-most-once delivery semantics is very straightforward. I like to call it YOLO delivery. We can send a message from system A to B and we don't care if B receives it or not. Very useful for some massive data harvesting use cases, e.g. collecting web page clicks, tracking a vehicle, etc.

If you lose a message from time to time, either it's not a problem at all, or you can reconcile the state very easily.

at-least-once delivery

In this case, we need to be sure that a message was delivered. Let's say it's a money transfer request or shopping cart payment. Such messages cannot be lost because it will manifest in significant state inconsistencies, not to mention that it would ruin the company’s reputation, which nowadays is very important to sustain any business.

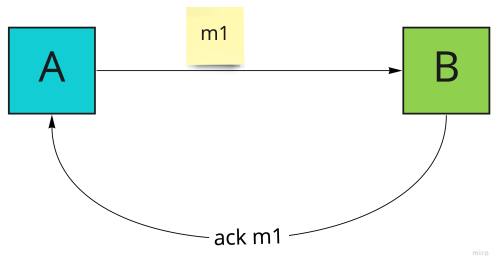

In most situations, the producer side (A) is responsible for achieving at-least-once delivery by waiting for acknowledgment from a consumer (B) that message (m1) was delivered. If not received, the producer will send the message again.

This is a standard approach and in most cases, it is a perfectly acceptable choice. If you need more details about the possible implementation, check out this blog about Transaction Outbox Pattern.

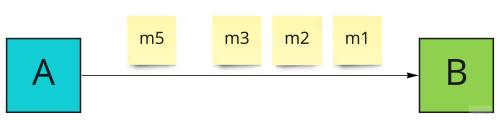

Many developers forget that we can reverse this dependency and make the consumer (B) responsible for at-least-once delivery guarantee. Suppose that the producer (A) sends messages m1, m2, m3 and because of some reasons, m4 is lost. The next message from the producer will be m5.

The consumer doesn't have to acknowledge anything, but it has to track the message sequence number. If it spots a gap, it should launch a redelivery strategy. The easiest one would be to ask the producer to send all messages again, starting from the missing offset.

This strategy is especially handy when you need to preserve the order of processing on the consumer side. Otherwise, we might asynchronously ask the producer only for missing offsets and continue the processing. For both cases, the offset value is very important.

If you are able to generate a strictly monotonically increasing sequence number without gaps, for each message, then the at-least-once delivery implementation will be rather easy. Sometimes it's a global sequence but very often the sequence is per domain aggregate, which is the state consistency guardian.

Be aware that if we allow gaps in the sequence from the producer side, which might be the case for multi-node setup, we should take it into consideration also on the consumer side. Redelivery logic will be more complex.

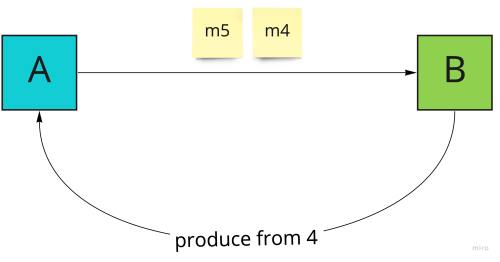

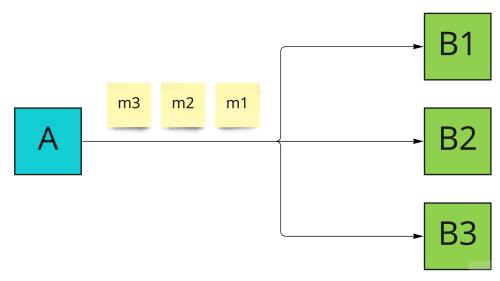

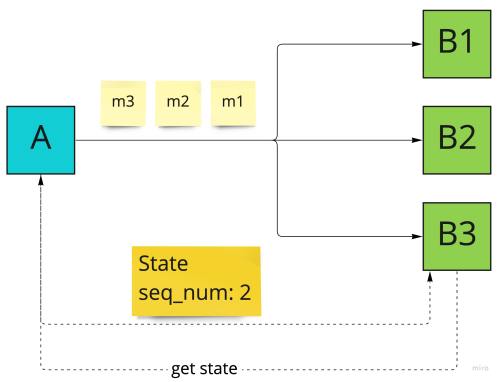

Sometimes a stream of messages cannot be "restarted". A fun-out communication is a good example here:

Consumers could be mobile devices that process a stream of state changes. This might be a WebSocket topic with order book state updates for an exchange system or a conversation state updates for a Whatsapp-like solution. One mobile device could for a moment loose the connection and miss some messages from the topic.

In this case, state reconciliation requires:

- buffering the main message stream,

- asking for the actual state with the current sequence number

- using the state and starting processing the messages stream from that sequence number (message m1 should be ignored)

It sounds like the consumer side at-least-once delivery required more work. That's true, but there are use cases where it's worth paying the price.

Generally speaking, this kind of at-least-once delivery strategy is less traffic-heavy. Reconciliation should be a relatively rare situation, so we don't have to pay a price for acknowledging each message. I find it especially useful when playing with WebSocket communication with many clients consuming the same stream of data.

effectively-once delivery with deduplication

How about the famous and so desired exactly/effectively-once delivery? Of course, we all know how to solve this. Each message should have some unique id that can be used to check if the message was consumed before. There are 2 gotchas here.

First of all, our effect must be persisted in one transaction with the unique id. In a pseudo-code this could look like:

begin transaction;

update something based on a given message;

save unique id from the message if not present;

end transaction;For a classic RDBMS, achieving this won't be a problem. A separate table with a unique constraint on the id column and we are ready to go. In case of processing the same message twice, the transaction will fail and we can assume that this message was processed before.

The only problem is that such deduplication is not so easy to implement with other storage solutions.

In Redis, transactions have slightly different semantics because it's a key-store and you should use the WATCH operator to simulate similar behaviour.

In distributed databases like Cassandra, some transaction support exists, but you should be very careful with it. Any update or insert with an IF statement launches a lightweight transaction under the hood. The name is very misleading, because it's a pretty heavy operation:

Lightweight transactions should not be used casually as the latency of operations increases fourfold due to the round-trips necessary between the CAS coordinators.Also in Cassandra (as in many distributed databases), you cannot update two tables in a transaction, simply because they can exist on two different nodes.

A piece of advice: make sure that your underlying store supports transactions as above before you promise to deliver effectively-once processing.

The second problem is that we cannot keep the unique ids forever. Either we hit a performance issue with a too big unique index or we hit a storage limit constraint, for data that is not business relevant. Because of that, we will keep unique ids from the last hour, week, month, or year depending on the requirements.

Make a plan to remove old ids automatically or manually, but also make sure that everyone understands the implications of a given deduplication strategy. This is especially important in case of some outages where our system is off for server minutes/hours and once back to life, it will try to reprocess some messages once again.

If we have at-least-once delivery coordinated on the consumer side, we could use sequence number for deduplication as well. Based on the fact that the next message should have a sequence number + 1, we need to store only the current sequence value. This kind of deduplication will not have any time/storage constraints.

However, as I already mentioned, the challenge is to generate a sequence number without gaps. Sometimes there could be a temptation to use existing technical fields for that, like a message offset from the Kafka broker. Unfortunately, this won't be a bulletproof deduplication because we can produce duplicates on the producer side.

Enabling idempotence will minimize the chances for duplicates, but won't eliminate it completely.

Summary

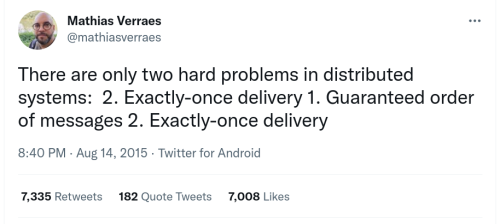

One of my favourite tweets about distributed systems is Mathias Verraes’ wordplay:

(source)

This beautiful metaphor should be above every programmer's desk. If this doesn't make you laugh and cry at the same time, then … eventually, it will. I just scraped the surface of possible problems and solutions with message delivery.

Now, it's your turn to dive deep and practice with them based on real-life examples.